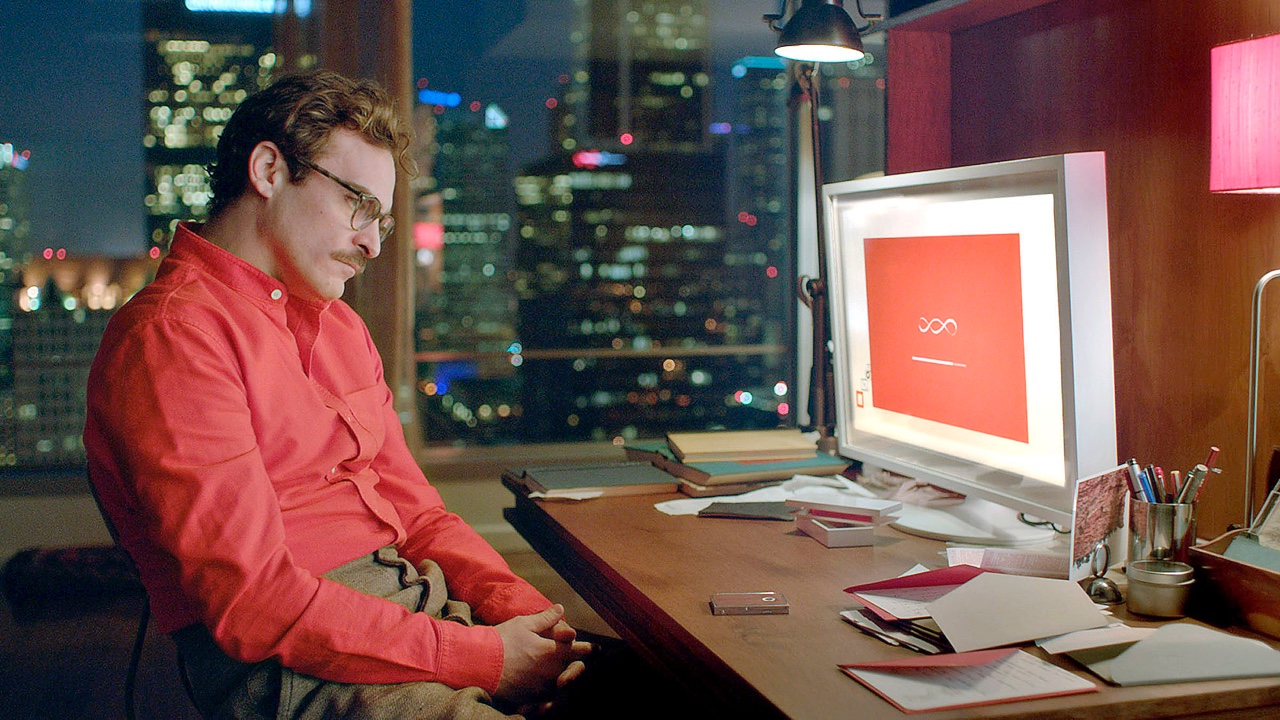

How can conversational interfaces improve the user experience? This is not an easy question, because the answer really depends on how you look at it. The conversational experience that Joaquin Phoenix has with his operating system in the movie “Her” is still far away. We are currently still in the functional domain, trying to figure out where the functional boundaries are. The emotional nuances are being explored, but this is not yet the time to fall in love with your digital assistant. We are still in the “interface” stage, where talking is focussed on getting things done. The “interface” could be considered a facilitator for human-computer interaction. It’s a tool.

So when you look at it from an interface perspective, the voice element is just another way to interact with your tool (your computer). You could define voice as the next form of human-computer interaction. But it’s more than that! The computer is far more than just a tool. It’s the most important device you have: your whole life, your business, your shopping, your finances and your social life are managed by this machine. And not just your life, but that of everyone, and that gigantic pile of data makes voice more than just a new way to interact with your tool. Together with all this data and intelligence, the tool comes alive and has the potential to truly become your assistant. That’s why all these major companies invest in it.

Cortana, Google Assistant, Alexa and Siri all have their own little nuances, but they are all focussed on understanding their data and finding out how to make it valuable and fit into our lives in a constructive way.

The challenge for designers is to find ways to make voice natural and smart. Currently we are still following scripts, and the system jams if something unexpected happens. A conversation is all about interacting with words, in mutual understanding and respect. In the context of a business, we want our service to be handled just like humans do, only faster. That actually sounds like we are trying to recreate a digital human…

Google Duplex has come a long way: their AI is doing a really good job at error handling and dealing with erratic scenarios. They showed a demo that almost reaches that next level. This is a very hard thing to do, but their AI seems to handle it pretty well. You could almost say that they finally passed the Turing test: you cannot tell whether the other voice is human. Sure, the example is very much focussed on making appointments in two scenarios, but I feel that this is the right direction for AI and voice to develop in. If we can provide a specific direction and teach the system to adapt within a certain context, we are indeed creating a better user experience.